I set up a local LLM and then ran some experiments with it. This post shows a bit about those experiments and a little code. The setup, which uses Docker to containerize an Ollama LLM, is described on my blog.

The Basic Setup

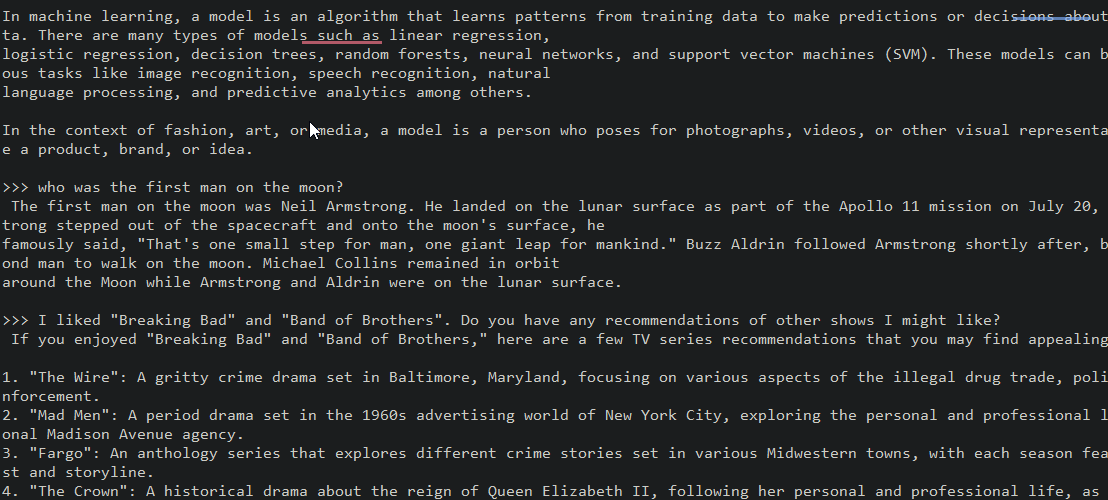

As described on my blog, I downloaded an Ollama container image, ran it, and then downloaded and ran the mistral model. Interactively, I was able to query the model as I would ChatGPT or any other LLM. You can see the results below from my first few queries.
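
The same kind of query can also be sent from a script instead of the interactive prompt. Here is a minimal sketch, assuming the container publishes Ollama's default port 11434 on localhost and the mistral model has already been pulled. The helper names `build_request` and `ask` are hypothetical and only for illustration; this is not necessarily how I ran my own queries.

```python
import json
import urllib.request

# Assumption: the Ollama container publishes its default port on localhost.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_request(prompt, model="mistral", url=OLLAMA_URL):
    """Build a POST request for Ollama's /api/generate endpoint."""
    payload = {
        "model": model,
        "prompt": prompt,
        "stream": False,  # ask for one JSON object instead of a token stream
    }
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(prompt, model="mistral", timeout=120):
    """Send the prompt to the local model and return its text reply."""
    request = build_request(prompt, model)
    with urllib.request.urlopen(request, timeout=timeout) as resp:
        body = json.loads(resp.read().decode("utf-8"))
    return body["response"]
```

With the container running, calling `ask("Why is the sky blue?")` should return the model's answer as a string. Setting `stream` to `False` keeps the example short, since the reply then arrives as one JSON object rather than a sequence of partial chunks.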

From here, I disconnected