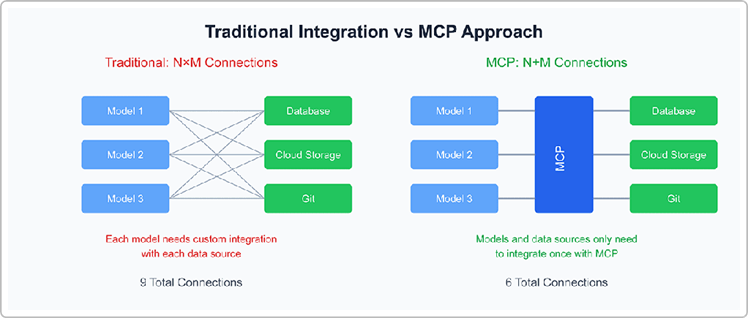

Large Language Models (LLMs) like Anthropic’s Claude now offer very large context windows (up to 100k tokens in Claude 2), which let them consider entire documents or codebases in a single pass. However, supplying these models with the right context remains a challenge. Traditionally, developers have resorted to complex prompt engineering or retrieval pipelines to feed external information into an LLM’s prompt. Anthropic’s Model Context Protocol (MCP) is a new open standard designed to simplify and standardize this process. Think of MCP as the “USB-C for AI applications” – a universal connector that lets your LLM seamlessly access external data, tools, and